Job Writer: Drive

To write data to files, use a Drive connection and select the desired File Format. The following file formats are supported:

- CSV

- JSON

- XML

- Parquet

- Raw

Date Field Identifier

The Date Field Identifier determines which date value the date tokens use. Date tokens can appear in the path, the file name, or both. If the Date Field Identifier is left blank, date tokens resolve to the job execution date/time. To partition data based on the data itself, select a source field that holds a date/time value instead. Using a source column groups records into time windows, which is useful when loading Delta Lake environments. The following date tokens are available:

- yyyy, YYYY (four-digit year)

- yy, YY (two-digit year)

- mm, MM (month)

- dd, DD (day of the month)

- dow, DOW (day of the week)

- doy, DOY (day of the year)

- hh, HH (hour)

- nn, NN (minutes)

- ss, SS (seconds)

For YY, MM, DD, DOY, HH, NN, and SS, the upper-case form pads the value with leading zeroes when needed (for example, MM produces 03 for March).
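To illustrate how lower-case and upper-case tokens differ, here is a minimal Python sketch of token substitution. It is not DataZen's implementation: the helper name `resolve_date_tokens` is hypothetical, tokens are assumed to be wrapped in square brackets (as in the Path Override example on this page), and the 24-hour clock, ISO weekday numbering (1 = Monday), and three-digit DOY padding are all assumptions.

```python
import re
from datetime import datetime


def _token_values(d: datetime) -> dict[str, str]:
    """Map each date token to its value for d (hypothetical semantics)."""
    doy = d.timetuple().tm_yday
    dow = d.isoweekday()  # assumption: 1 = Monday ... 7 = Sunday
    return {
        "yyyy": str(d.year), "YYYY": f"{d.year:04d}",
        "yy": str(d.year % 100), "YY": f"{d.year % 100:02d}",
        "mm": str(d.month), "MM": f"{d.month:02d}",
        "dd": str(d.day), "DD": f"{d.day:02d}",
        "dow": str(dow), "DOW": str(dow),
        "doy": str(doy), "DOY": f"{doy:03d}",  # assumption: padded to 3 digits
        "hh": str(d.hour), "HH": f"{d.hour:02d}",  # assumption: 24-hour clock
        "nn": str(d.minute), "NN": f"{d.minute:02d}",
        "ss": str(d.second), "SS": f"{d.second:02d}",
    }


def resolve_date_tokens(template: str, d: datetime) -> str:
    """Replace each [token] with its value; unknown [names] are left unchanged."""
    values = _token_values(d)
    return re.sub(r"\[([A-Za-z]+)\]",
                  lambda m: values.get(m.group(1), m.group(0)),
                  template)
```

With this sketch, `resolve_date_tokens("sales_[yyyy][MM][DD]_[HH][NN].csv", datetime(2024, 3, 5, 7, 8, 9))` returns `sales_20240305_0708.csv`, while `[yyyy]-[mm]-[dd]` for the same day yields `2024-3-5` because the lower-case tokens are not padded.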

Path and File Name

Use the Path Override field to specify the path or folder the file is written to. The File Name should include the file extension; it is not added automatically. Both fields accept DataZen functions, which control where files are created.

For example, the following settings create one target folder per year, based on the Date_of_Birth field, and a separate file for each value of the country field.

- Date Field Identifier: Date_of_Birth

- Path Override: c:\tmp\csv\[yyyy]\

- File Name: customer_{{country}}.txt

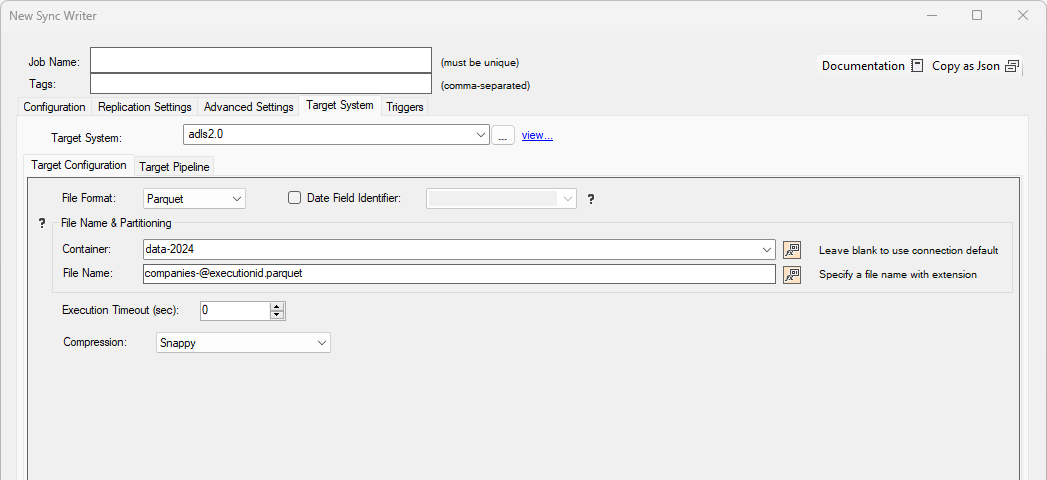
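The routing in this example can be sketched in Python as a grouping step: each record's Date_of_Birth fills the `[yyyy]` token and its country value fills `{{country}}`, so records sharing both values land in the same file. This is an illustration under assumptions, not DataZen's code: the helpers `resolve_target` and `partition` and the sample records are hypothetical, only the `[yyyy]` token is handled, and `{{field}}` is assumed to insert that field's value.

```python
import re
from collections import defaultdict
from datetime import date


def resolve_target(path_template, name_template, record, date_field):
    """Build the target file path for one record (hypothetical helper)."""
    d = record[date_field]
    # Only the [yyyy] token is handled in this sketch.
    path = path_template.replace("[yyyy]", f"{d.year:04d}")
    # Assumed semantics: {{field}} is replaced by that field's value.
    name = re.sub(r"\{\{(\w+)\}\}", lambda m: str(record[m.group(1)]), name_template)
    return path + name


def partition(records, path_template, name_template, date_field):
    """Group records by the file they would be written to."""
    files = defaultdict(list)
    for record in records:
        files[resolve_target(path_template, name_template, record, date_field)].append(record)
    return dict(files)


# Hypothetical sample data.
customers = [
    {"name": "Ana", "Date_of_Birth": date(1990, 4, 2), "country": "BR"},
    {"name": "Ben", "Date_of_Birth": date(1990, 11, 30), "country": "BR"},
    {"name": "Chloe", "Date_of_Birth": date(1985, 1, 15), "country": "FR"},
]
targets = partition(customers, "c:\\tmp\\csv\\[yyyy]\\", "customer_{{country}}.txt", "Date_of_Birth")
for target, rows in targets.items():
    print(target, [r["name"] for r in rows])
```

Run as-is, the sketch groups Ana and Ben into `c:\tmp\csv\1990\customer_BR.txt` and Chloe into `c:\tmp\csv\1985\customer_FR.txt`.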

Example: Parquet Target

In this example, the target is a Parquet file in ADLS, written to the specified container. The file name differs for each execution because it contains the @executionid variable.

The Parquet file uses the Snappy compression algorithm. The Date Field Identifier is left at its default, the job execution date/time; however, because the path and file name contain no date tokens, this setting has no effect.

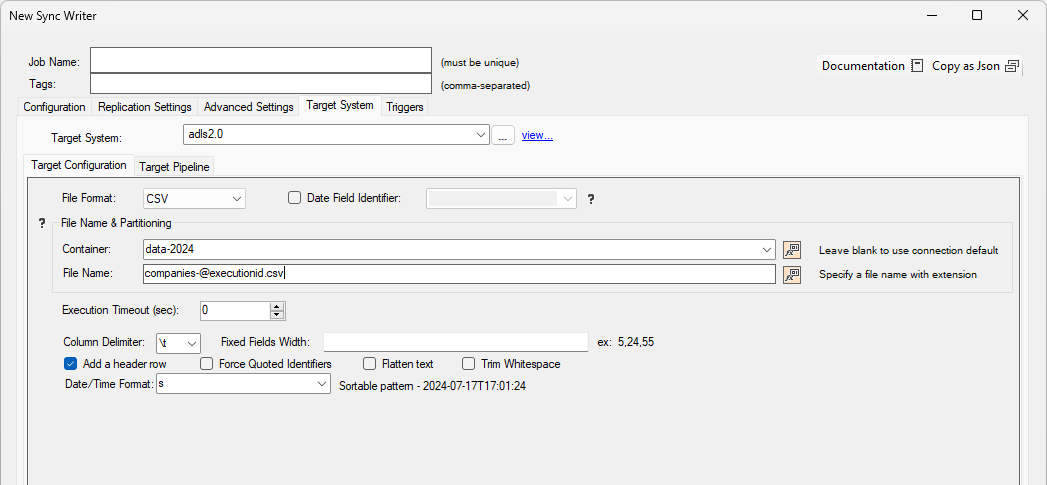

Example: CSV Target

In this example, the target is a CSV file in ADLS, written to the specified container. The file name differs for each execution because it contains the @executionid variable.

The CSV file is delimited because no fixed-length fields are specified. In addition, a header row is added, and any date fields are formatted using a sortable pattern.