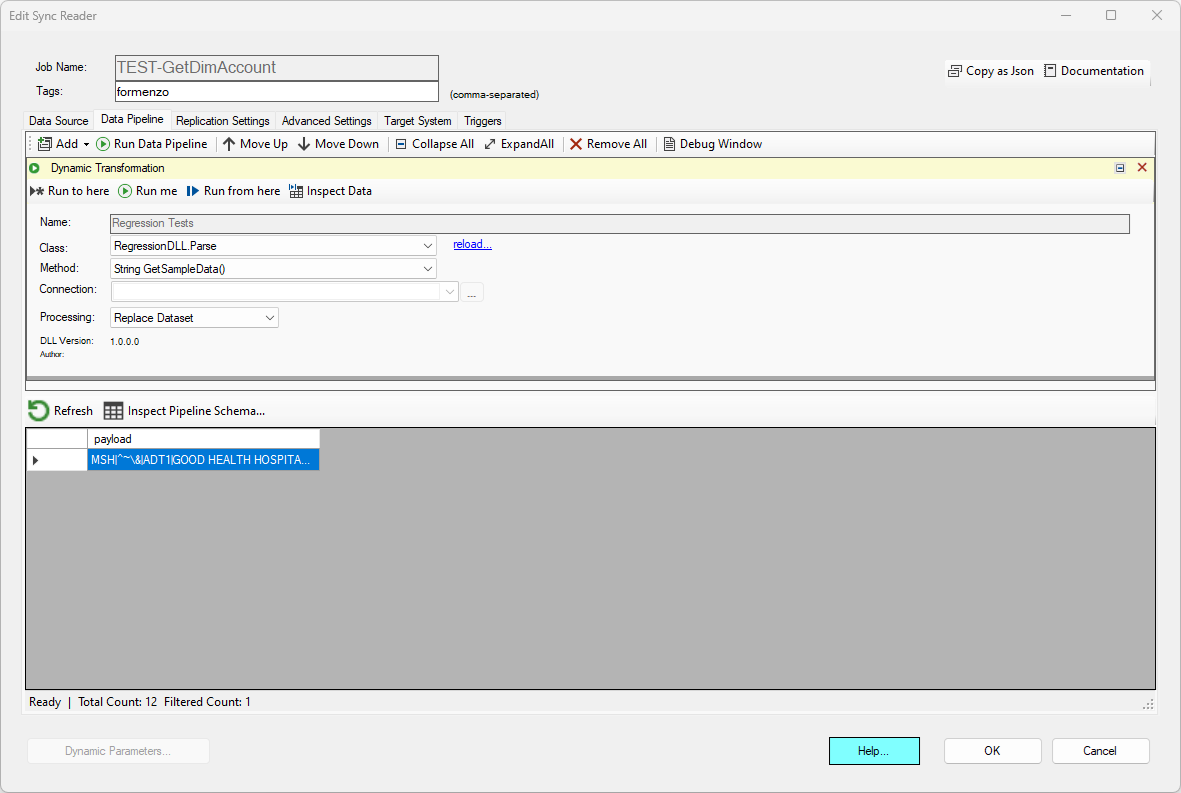

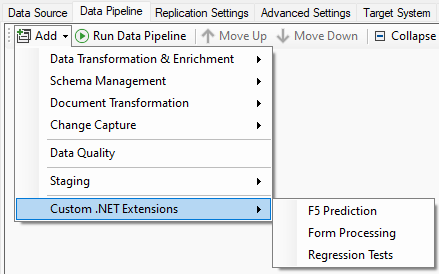

Custom Extensions

Custom extensions are .NET DLLs developed according to the specifications provided in the Develop .NET Extensions section. After you build a .NET extension and import it, it becomes available under the Add -> Custom .NET Extensions menu.

The operation performed by a .NET extension is entirely up to its developer. Once you have loaded the .NET extension in the data pipeline, choose the Class and Method to execute. Input parameters are assigned to the selected method automatically. However, if the method accepts a string parameter, the Connection dropdown box becomes available; choosing a connection from this list sends the entire connection string, decrypted, to the selected method.
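Because the method receives the whole connection string as a single string, it usually has to split it into individual settings itself. The sketch below is in Python purely for illustration (an actual extension is a .NET DLL, where a class such as `DbConnectionStringBuilder` would normally do this). The function name `parse_connection_string` is hypothetical, and the sketch assumes a simple `Key=Value;Key=Value` format without quoted values.

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split a simple 'Key=Value;Key=Value' connection string into a dict.

    Hypothetical helper. Assumes values are not quoted and contain no ';'.
    A value may contain '=' (for example a password), because only the
    first '=' in each segment separates the key from the value.
    """
    settings: dict[str, str] = {}
    for segment in conn_str.split(";"):
        segment = segment.strip()
        if not segment:
            continue  # ignore empty segments, such as one left by a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        # Keys are treated case-insensitively, so normalize them to lower case.
        settings[key.strip().lower()] = value.strip()
    return settings


if __name__ == "__main__":
    conn = "Server=db01;Database=Sales;User Id=etl;Password=p@ss=1;"
    print(parse_connection_string(conn)["database"])  # Sales
```

Since the string arrives decrypted, a method like this should avoid logging the raw string or the parsed password.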

Make sure you trust the developer of the DLL before sending a connection string as a parameter, since the string is passed in decrypted form.

For security reasons, this component is not available on shared cloud agents; you must use either a self-hosted agent or a dedicated cloud agent.

Finally, set the Processing Mode to either Continue (the pipeline data set remains unchanged) or Replace (the data set is completely replaced with the output of the method). The Replace option is only valid if the method returns a DataTable, string, or byte[].
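The Continue/Replace rule can be modeled roughly as follows. This is a conceptual Python sketch, not DataZen's implementation: `DataTable` is a hypothetical stand-in for the .NET `System.Data.DataTable`, Python `bytes` stands in for `byte[]`, and `apply_extension_result` is an illustrative name.

```python
from enum import Enum
from typing import Any


class ProcessingMode(Enum):
    CONTINUE = "Continue"
    REPLACE = "Replace"


class DataTable:
    """Hypothetical stand-in for a .NET System.Data.DataTable."""

    def __init__(self, rows: list[dict[str, Any]]) -> None:
        self.rows = rows


# Result types that Replace accepts: DataTable, string, or byte[] (bytes here).
REPLACEABLE_TYPES = (DataTable, str, bytes)


def apply_extension_result(dataset: Any, result: Any, mode: ProcessingMode) -> Any:
    """Return the data set the pipeline continues with after the method runs."""
    if mode is ProcessingMode.CONTINUE:
        # Continue: the pipeline data set is left unchanged.
        return dataset
    if not isinstance(result, REPLACEABLE_TYPES):
        raise TypeError(
            "Replace requires a DataTable, str, or bytes result, "
            f"got {type(result).__name__}"
        )
    # Replace: the method's output becomes the new pipeline data set.
    return result


if __name__ == "__main__":
    current = DataTable([{"id": 1}])
    print(apply_extension_result(current, "new content", ProcessingMode.REPLACE))  # new content
```

In this model, a method that returns any other type (an integer, for example) still works with Continue, but raises an error with Replace.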

Main Use Cases

There are many use cases for which custom .NET extensions make sense, including encapsulating complex, high-performance transformations that require C or C++ libraries (such as Machine Learning algorithms) or implementing advanced encryption/decryption modules.

Another important use case is processing industry-specific flat files, such as HL7 health care data sets.

Finally, custom components can extend DataZen to read data from source systems that are not yet supported. For example, a .NET component could read EBCDIC files from a mainframe computer.
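As an illustration of the kind of work such a component performs, the sketch below decodes fixed-length EBCDIC text records. It is written in Python for brevity (a .NET extension would typically use an EBCDIC code-page `Encoding` instead) and assumes code page 037, fixed-length records with no delimiters, and character-only fields; packed-decimal (COMP-3) fields would need separate handling. `read_ebcdic_records` is a hypothetical name.

```python
def read_ebcdic_records(data: bytes, record_length: int, codec: str = "cp037") -> list[str]:
    """Split fixed-length EBCDIC records and decode each one to text.

    Hypothetical helper. Assumes character-only fields in a single-byte
    EBCDIC code page (cp037 by default); trailing padding spaces are removed.
    """
    if record_length <= 0:
        raise ValueError("record_length must be positive")
    if len(data) % record_length != 0:
        raise ValueError("data length is not a multiple of record_length")
    return [
        data[i:i + record_length].decode(codec).rstrip()
        for i in range(0, len(data), record_length)
    ]


if __name__ == "__main__":
    # Build sample EBCDIC input by encoding two 10-character records.
    raw = "ACME  0042".encode("cp037") + "BETA  0007".encode("cp037")
    print(read_ebcdic_records(raw, 10))  # ['ACME  0042', 'BETA  0007']
```

Because cp037 is a single-byte code page, each character occupies exactly one byte, which is what lets the sketch slice records by byte offset.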

Example

In this example, the component returns a custom HL7 record to be further processed by the data pipeline.
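As a rough illustration of how an extension might turn an HL7 v2 message into records, the following Python sketch splits a message into segments, fields, and components. It assumes the usual `|` field and `^` component separators and ignores repetition (`~`), escape (`\`), and sub-component (`&`) handling. `parse_hl7_message` is a hypothetical name, and a production extension would more likely rely on a dedicated HL7 library.

```python
from typing import Any


def parse_hl7_message(message: str) -> list[dict[str, Any]]:
    """Split an HL7 v2 message into one record per segment.

    Hypothetical helper. Assumes '|' as field separator and '^' as component
    separator; repetitions (~), escapes (\\) and sub-components (&) are not handled.
    """
    records: list[dict[str, Any]] = []
    # Segments are terminated by carriage returns; also accept \r\n and \n.
    normalized = message.replace("\r\n", "\r").replace("\n", "\r")
    for segment in normalized.split("\r"):
        if not segment.strip():
            continue  # skip empty segments, e.g. after the final terminator
        name, *fields = segment.split("|")
        components: list[list[str]] = []
        for index, field in enumerate(fields):
            if name == "MSH" and index == 0:
                # MSH-2 holds the encoding characters themselves; keep it intact.
                components.append([field])
            else:
                components.append(field.split("^"))
        # For most segments, fields[n - 1] is field n (PID-5 -> index 4).
        # For MSH, index 0 is MSH-2, because MSH-1 is the '|' separator itself.
        records.append({"segment": name, "fields": components})
    return records


if __name__ == "__main__":
    msg = "MSH|^~\\&|LAB|HOSP|||202401011200||ORU^R01|123|P|2.5\rPID|1||12345||DOE^JOHN\r"
    for record in parse_hl7_message(msg):
        print(record["segment"], record["fields"][:2])
```

With the sample message, the patient name (PID-5) ends up in `records[1]["fields"][4]` as `["DOE", "JOHN"]`, a shape that later pipeline steps could flatten into columns.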