Data Pipeline

This section covers building ETL pipelines using the management UI. Most ETL operations are available in the UI, but SQL CDC offers additional options; to view all of them, see the SQL CDC section.

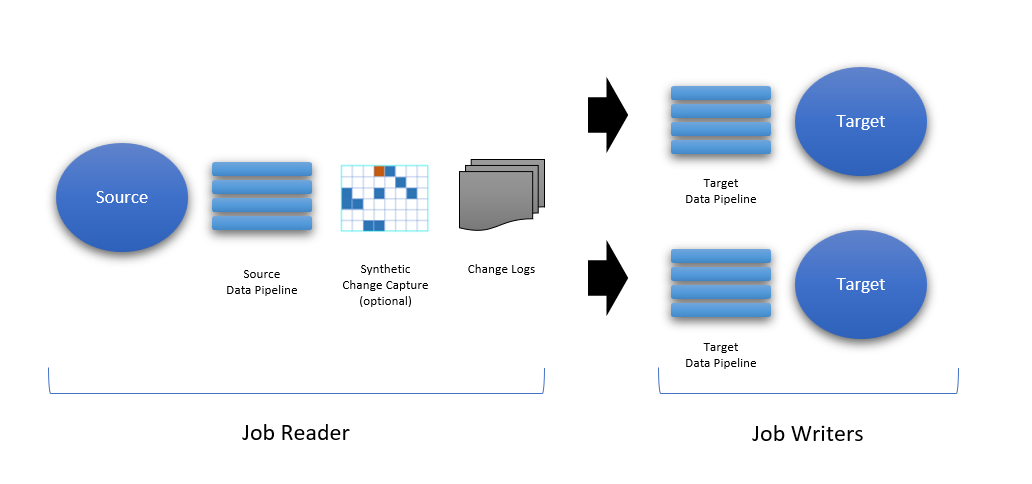

Job Pipelines can define Data Pipelines that translate, transform, and enhance a dataset on the fly before it is saved to the change log and/or sent to a target system. When applied at the source, the Data Pipeline executes before the change capture, but after the high watermark is applied against the source.

The execution of the Data Pipeline follows the order in which the pipeline components are listed. You can change the order of each component by using the up/down arrows when using the management interface.
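
The ordering rule above can be pictured with a minimal Python sketch. This is not DataZen code: the component functions, the row format (a list of dictionaries), and `run_pipeline` are all hypothetical names chosen for illustration. Each component receives the output of the one listed before it.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Component = Callable[[List[Row]], List[Row]]


def keep_active(rows: List[Row]) -> List[Row]:
    # Hypothetical filter component: keep only rows flagged as active.
    return [r for r in rows if r["active"]]


def add_full_name(rows: List[Row]) -> List[Row]:
    # Hypothetical dynamic column component: derive a new column.
    return [{**r, "full_name": f'{r["first"]} {r["last"]}'} for r in rows]


def run_pipeline(components: List[Component], rows: List[Row]) -> List[Row]:
    # Components run strictly in listed order; each one sees the
    # data set produced by the component before it.
    for component in components:
        rows = component(rows)
    return rows


sample = [
    {"first": "Ada", "last": "Lovelace", "active": True},
    {"first": "Alan", "last": "Turing", "active": False},
]
result = run_pipeline([keep_active, add_full_name], sample)
```

Because each step consumes the previous step's output, moving a component up or down can change the result, for example when one component removes a column that a later component expects.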

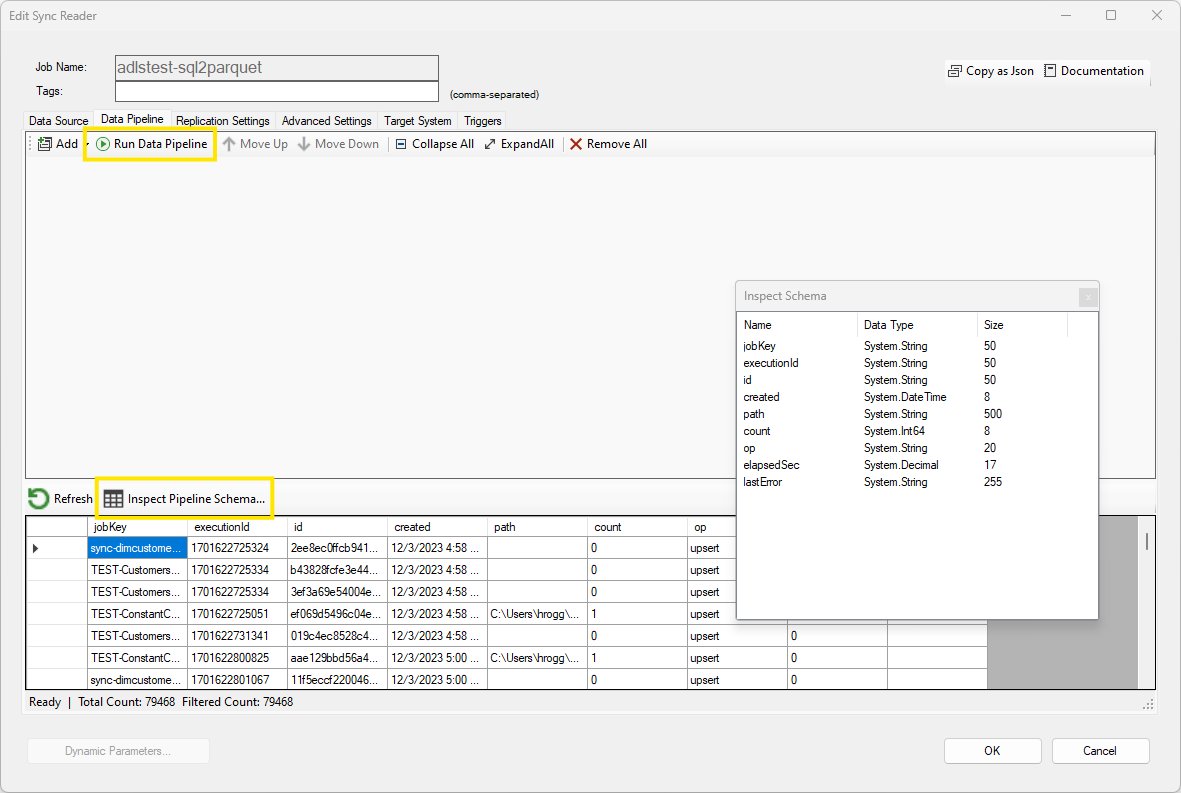

To preview the data pipeline, click on the Run Data Pipeline button. Since the Target System uses the output of the data pipeline, if one is defined, it is important to execute the data pipeline before configuring the target system options.

When defining a Direct Job, two separate Data Pipelines can be defined: one on the source and another on the target. The separation of the reader and writer operation allows multiple data pipelines to be executed, providing the flexibility needed to apply different processing logic based on the target. For example, if one of the targets is a TEST environment, the target data pipeline for the TEST system could mask the data before pushing the data.
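
As a rough illustration of the source/target split described above, the sketch below runs one source pipeline and then a separate pipeline per target. Everything here is an assumption made for the example: the target names, the `mask_email` and `uppercase_country` components, and the `run_direct_job` helper are hypothetical and do not represent DataZen's internals.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]
Component = Callable[[List[Row]], List[Row]]


def uppercase_country(rows: List[Row]) -> List[Row]:
    # Hypothetical source-side component applied once for all targets.
    return [{**r, "country": r["country"].upper()} for r in rows]


def mask_email(rows: List[Row]) -> List[Row]:
    # Hypothetical masking component: hide the local part of the address.
    masked = []
    for r in rows:
        local, _, domain = r["email"].partition("@")
        masked.append({**r, "email": "*" * len(local) + "@" + domain})
    return masked


def run(components: List[Component], rows: List[Row]) -> List[Row]:
    for component in components:
        rows = component(rows)
    return rows


source_pipeline: List[Component] = [uppercase_country]
target_pipelines: Dict[str, List[Component]] = {
    "PROD": [],            # no extra processing
    "TEST": [mask_email],  # mask before pushing to the TEST system
}


def run_direct_job(rows: List[Row]) -> Dict[str, List[Row]]:
    # The source pipeline runs once; each target then applies its own pipeline.
    captured = run(source_pipeline, rows)
    return {name: run(pipeline, captured) for name, pipeline in target_pipelines.items()}


outputs = run_direct_job([{"email": "ada@example.com", "country": "uk"}])
```

In this sketch the TEST target receives masked email addresses while the PROD target receives the source pipeline's output unchanged.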

Building a Data Pipeline

The data pipeline interface provides a canvas for adding components that can translate or transform the data using pre-built data engineering functions. When the data pipeline is empty, you can run the pipeline to preview the source data; clicking on the Run Data Pipeline button executes the full pipeline. You can also inspect the schema of the data set at any time.

Running the data pipeline requires the reader (or writer) to have some sample data available; a warning will be displayed if no data is currently available.

To add pipeline components, click on the Add button and choose the component to add.

Using a Component

When building or modifying a job, you can inspect and test your data pipeline. To test the data pipeline, you need some data available from the source system; this is normally done by running a preview operation. Once data is available, you can run the data pipeline in full, up to a specific component, or as a single component. You can also inspect the data set schema at that point by clicking on the Inspect Data Schema button. Keep in mind, however, that when the data pipeline is run partially, the schema may be incomplete and certain options in the Target screen may not show the final schema.

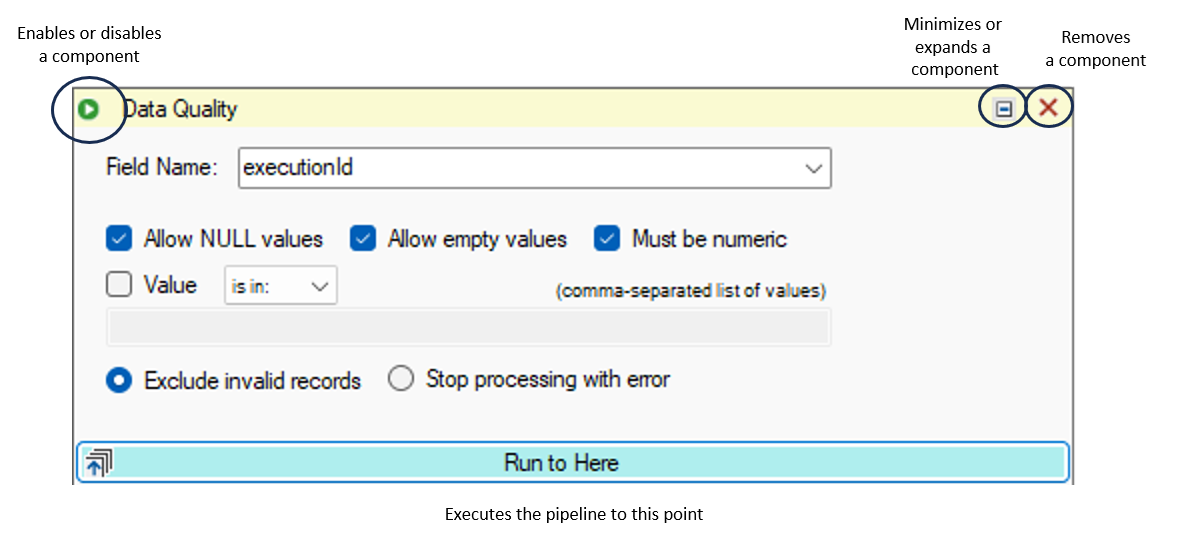

Each component comes with a menu allowing you to perform the following operations:

- Run to here: Run all previous components including the selected component, then stop processing the data pipeline

- Run me: Run the selected component only; for this option to be available, the previous component must have been executed at least once

- Run from here: Run all remaining components including the selected component

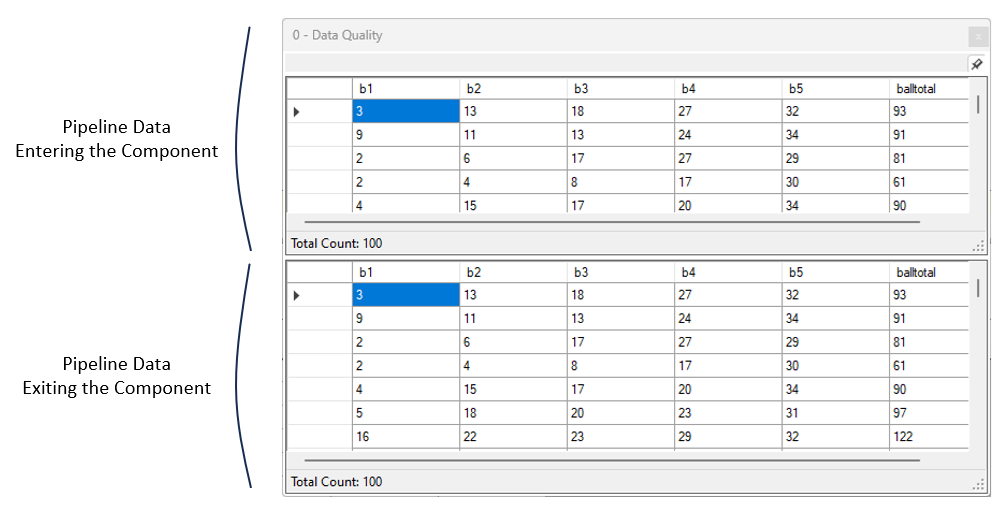

- Inspect Data: Open a debugging window showing the data entering and leaving the component

You can disable and re-enable a component by clicking on the top-left icon; this allows you to test your pipeline without the component if needed.
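
The run options and the disable toggle described above can be modeled with a small Python sketch. It is only an interpretation of the menu descriptions, not DataZen's implementation: the `PipelineRunner` class is hypothetical, and it assumes that "Run me" and "Run from here" start from the cached output of the previous component and that a disabled component passes its input through unchanged.

```python
from typing import Callable, Dict, List, Set

Row = Dict[str, int]
Component = Callable[[List[Row]], List[Row]]


class PipelineRunner:
    def __init__(self, components: List[Component], source_rows: List[Row]) -> None:
        self.components = components
        self.source_rows = source_rows
        self.disabled: Set[int] = set()           # indexes of disabled components
        self.outputs: Dict[int, List[Row]] = {}   # last output of each component

    def _input_for(self, index: int) -> List[Row]:
        if index == 0:
            return self.source_rows
        if index - 1 not in self.outputs:
            raise RuntimeError("previous component has not been executed yet")
        return self.outputs[index - 1]

    def _run_range(self, start: int, stop: int) -> List[Row]:
        rows = self._input_for(start)
        for i in range(start, stop):
            if i not in self.disabled:  # assumption: disabled means pass-through
                rows = self.components[i](rows)
            self.outputs[i] = rows
        return rows

    def run_to_here(self, index: int) -> List[Row]:
        return self._run_range(0, index + 1)

    def run_me(self, index: int) -> List[Row]:
        return self._run_range(index, index + 1)

    def run_from_here(self, index: int) -> List[Row]:
        return self._run_range(index, len(self.components))


def double(rows: List[Row]) -> List[Row]:
    return [{"n": r["n"] * 2} for r in rows]


def add_one(rows: List[Row]) -> List[Row]:
    return [{"n": r["n"] + 1} for r in rows]


def negate(rows: List[Row]) -> List[Row]:
    return [{"n": -r["n"]} for r in rows]


runner = PipelineRunner([double, add_one, negate], [{"n": 2}])
```

With this model, calling `runner.run_me(1)` before anything else raises an error because the first component has not produced output yet, which mirrors the availability rule for "Run me".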

Clicking on Inspect Data opens a window for the selected component. You can open this window for multiple components. As data flows through the component, the input and output data sets will appear. If nothing is displayed, the component has not yet executed. To keep this window in front, click on the pin icon.

Local vs. Agent Execution

Data Pipelines execute on the machine where the job runs. If a job is running in the cloud, for example, the Data Pipeline components will also be executed in the cloud. However, running a Data Pipeline from DataZen Manager while creating or editing a job executes the components on your local machine. Because of security differences between your local machine and the agent's, some components that succeed locally may fail on the DataZen Agent, for example if firewall rules prevent connections from cloud locations.

Pipeline Components

The pipeline components available provide advanced data engineering functions that accelerate integration projects.

You can also add your own custom .NET components as needed for more advanced scenarios or high performance needs.

You can extend Data Pipelines in two ways: use an HTTP/S Endpoint component or a .NET Extension.

Extending your data pipelines gives you the ability to enhance your company's data engineering and data management capabilities.

For example, if your company has published a Python AWS Lambda Function or an Azure Function using your company's proprietary AI/ML model, you could easily call the HTTP/S endpoint inline as part of your data pipeline execution.
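
To make the Lambda scenario concrete, here is a minimal sketch of a Python AWS Lambda handler that could sit behind such an HTTP/S endpoint. The request and response shapes are assumptions: this section does not specify what the HTTP/S Endpoint component sends or expects, so the `rows` payload key, the `risk_score` column, and the `score` function (a stand-in for a proprietary model) are hypothetical. The handler follows the API Gateway proxy convention of a JSON string in `event["body"]`.

```python
import json


def score(row: dict) -> float:
    # Placeholder for a proprietary AI/ML model (hypothetical logic).
    return round(min(1.0, float(row.get("amount", 0)) / 1000.0), 3)


def lambda_handler(event, context):
    # Assumed request body: {"rows": [ {...}, {...} ]}
    payload = json.loads(event.get("body") or "{}")
    rows = payload.get("rows", [])

    # Return each input row with one extra column added.
    enriched = [{**row, "risk_score": score(row)} for row in rows]

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"rows": enriched}),
    }
```

Returning the input rows together with the new column keeps the response easy to merge back into the pipeline data set; the actual merge behavior depends on how the component is configured.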

The Custom .NET Extension option is not available for shared Cloud Agents. However, dedicated cloud agents and self-hosted agents can use this option.

| Category | Component | Comments |
|---|---|---|
| Data Transformation | Data Filter | Applies a client-side filter to the data by adding a SQL Where clause, a JSON/XML filter, or a regular expression as a Data Filter |
| Data Transformation | Data Masking | Applies masking logic to a selected data column, such as credit card number or a phone number. Supports generating random numbers, free-form masking, and generic / full masking |
| Data Transformation | Data Hashing | Applies a hash algorithm to a selected data column (must be a string data type); supported hashing algorithms are MD5, SHA1, SHA256, SHA384 and SHA512 |
| Data Transformation | HTTP/S Endpoint Function | Calls an external HTTP/S function or endpoint, and adds the results to the output or merges it with the input data. |
| Schema Management | Apply Schema | Transforms the data set schema as specified with optional default values and DataZen function calls. |
| Schema Management | Dynamic Data Column | Adds a column dynamically using a simple SQL formula, or a DataZen Function. |
| Schema Management | Keep/Remove Columns | Quickly remove undesired columns from the data set. |
| Transformation | JSON/XML to Table | Convert an XML or JSON document into a data set of rows and columns. |
| Transformation | CSV to Table | Convert a flat file document into a data set of rows and columns. |
| Change Capture | Apply Synthetic CDC | Applies an inline Synthetic Change Capture. |
| Staging | Sink Data to SQL | Sinks the current data set to a SQL Server table, optionally appending, truncating, or recreating it with automatic schema management. |
| Staging | Run SQL Command | Runs a SQL batch on the fly and optionally uses the output as the new data set in the pipeline. Can use @pipelinedata() to access the current pipeline data set. |
| Other | Data Quality | Inspects and applies data quality rules on the current data set. |
| Other | Custom .NET Extensions | Calls an external .NET DLL, passing the current data set, and replaces the pipeline data set with the output provided if desired. |
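
To give a feel for what a column-level component such as Data Hashing does, the following Python sketch hashes one string column with one of the algorithms listed in the table. It uses Python's standard `hashlib` module; the `hash_column` helper, the UTF-8 encoding, and the hexadecimal output format are assumptions made for illustration and may differ from what the actual component produces.

```python
import hashlib
from typing import Dict, List

# Algorithm names from the table mapped to hashlib names.
SUPPORTED = {
    "MD5": "md5",
    "SHA1": "sha1",
    "SHA256": "sha256",
    "SHA384": "sha384",
    "SHA512": "sha512",
}


def hash_column(rows: List[Dict[str, object]], column: str,
                algorithm: str = "SHA256") -> List[Dict[str, object]]:
    name = SUPPORTED[algorithm]  # KeyError for unsupported algorithms
    hashed = []
    for row in rows:
        value = row[column]
        if not isinstance(value, str):
            # Mirrors the table's note that the column must be a string type.
            raise TypeError(f"column {column!r} must contain strings")
        # Assumption: UTF-8 encoding and lowercase hex digest.
        digest = hashlib.new(name, value.encode("utf-8")).hexdigest()
        hashed.append({**row, column: digest})
    return hashed


rows = [{"id": 1, "ssn": "123-45-6789"}]
hashed_rows = hash_column(rows, "ssn", "SHA256")
```

The other columns are left untouched and the input rows are not modified, so the same helper could be applied to several columns in sequence.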